Review aggregator websites Rotten Tomatoes and Metacritic have become staples of contemporary film culture and an integral part of the release and marketing of Hollywood, independent and world cinema. They rose to prominence in the late 1990s as the internet provided wider access to reviews of new film releases by critics working for a broad range of publications. Though different in detail, both Rotten Tomatoes and Metacritic aim to provide a synthesis of the critical community’s reaction to a film in a score of zero to 100. This approach has been both criticised for devaluing film criticism and confusing consensus with quality, and celebrated by those who see aggregators as useful tools for audiences (Kohn, 2017).

Before the internet, film critics’ reviews of the latest film releases mainly featured in local and national newspapers, sometimes accompanied by some form of numbered grade or star system. In the 1970s, US television embraced film criticism, most notably with the review show Sneak Previews (1975-1996) and its subsequent incarnation At the Movies (1982-1999). Both were initially presented by two Chicago newspaper critics, Roger Ebert and Gene Siskel, and reached a general audience through national syndication (Poniewozik, 2013). This was a highly concentrated and unified media environment in which the mainstream print press and television made up most of the general audience’s contact with non-marketing material on the latest films. With the internet came wider access to reviews from all major publications, along with the decentralization of film criticism away from newspapers and television stations and towards new media websites. The relationship between spectators, film releases and film criticism changed as audiences generated their own publicly available content, fostering online subcultures that engaged critically with established forms of film criticism.

Rotten Tomatoes and Metacritic, founded in 1998 and 1999 respectively, took advantage of this new media environment by providing a platform for film reviews to be collected in one place. Each review is attached to a score along with a short excerpt from the article encapsulating the critic’s opinion. Both websites aggregate these scores into a single figure out of 100 for each film (with Metacritic also covering games and music). Despite this broad similarity, the methodology each employs to arrive at this score and the user interface in which it is shown are markedly different, each spawning its own set of questions and criticisms.

The most significant methodological difference between the two websites is the community of film critics chosen to make up the pool of reviews that determines the “Tomatometer score” and the “Metascore”. Rotten Tomatoes casts a wide net across hundreds of outlets, including established print media like The New York Times; new media film criticism and news websites such as The Dissolve and Indiewire; entertainment and Hollywood-centred publications such as Empire; and more obscure digital publications, often run by non-professional critics, such as Popcorn Junkie and Cinemixtape. The number of reviews varies widely, with a mainstream film such as Argo (2012) garnering around three hundred and an independent film such as Paterson (2016) closer to two hundred. Metacritic opts for a much more restricted approach, with a community of 62 publications, mostly established newspapers (both national and local) and new media outlets of considerable scale such as The Wrap and The Verge (“Frequently Asked Questions”). Given the resources available to such outlets, most films with sizeable distribution in the United States can be expected to pick up at least thirty reviews on Metacritic, with more commercial cinema and high-profile titles from film festivals reaching over forty. Matt Atchity, Rotten Tomatoes’ editor-in-chief, argues that the website’s approach is more “democratic” than Metacritic’s (Atchity, 2012). The former explores the full breadth of film criticism, while the latter retains a sense of exclusivity about what serious film criticism is, a method which lends itself to accusations of elitism.

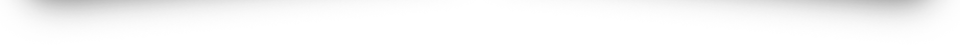

The second most significant difference between the two outlets is the way in which they choose to score reviews. Rotten Tomatoes scores reviews with either a “fresh” or “rotten” rating for a specific film, echoing the practice of throwing rotten food at a performer who fails to impress. The final Tomatometer score represents the overall percentage of fresh (i.e. positive) reviews the film has received from critics, which is displayed alongside another score referring only to “top critics” from the most read and respected publications. Metacritic uses conversion scales, available on its website, which turn a critic’s star or letter grades into a numeric scale between zero and 100. The final aggregated score is established through a weighted average and an undisclosed process of statistical normalization where certain publications’ grades are given more weight than others. In both the Tomatometer and the Metascore, the final score is then described. If a film receives above 60 on either scale it is described as “fresh” or as having “generally favourable reviews” respectively. Below that the Tomatometer describes a film as being “rotten”, while Metacritic describes films with a score between 40 and 60 as “mixed or average”, and 20 to 39 as “generally unfavourable”. Metacritic also describes both ends of the scale, below 20 and above 80, as “overwhelming dislike” and “universal acclaim” respectively, while Rotten Tomatoes only recognises films that have achieved a consistent Tomatometer score of over 75 per cent, and that have never fallen below 70 per cent, as being “certified fresh”.
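The arithmetic behind the two scores can be made concrete with a short Python sketch. It is an illustration under stated assumptions rather than either site's actual method: the `Review` class, the outlet names, the sample grades and the `weight` values are all hypothetical, and because Metacritic does not disclose its weights or its normalization step, the weighted average below leaves normalization out entirely. The labels follow the bands described above.

```python
from dataclasses import dataclass


@dataclass
class Review:
    outlet: str          # hypothetical outlet name
    score: float         # critic's grade, already converted to a 0-100 scale
    fresh: bool          # binary positive/negative call, as on Rotten Tomatoes
    weight: float = 1.0  # hypothetical outlet weight; the real ones are undisclosed


def tomatometer(reviews):
    """Percentage of reviews marked fresh, rounded to a whole number."""
    if not reviews:
        raise ValueError("at least one review is required")
    return round(100 * sum(r.fresh for r in reviews) / len(reviews))


def weighted_average(reviews):
    """Weighted mean of the converted scores (no statistical normalization)."""
    total_weight = sum(r.weight for r in reviews)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return round(sum(r.score * r.weight for r in reviews) / total_weight)


def metacritic_label(score):
    """Describe a whole-number score using the bands given in the text."""
    if score > 80:
        return "universal acclaim"
    if score > 60:
        return "generally favourable reviews"
    if score >= 40:
        return "mixed or average"
    if score >= 20:
        return "generally unfavourable"
    return "overwhelming dislike"


# Four invented reviews of one film; outlet A is given extra weight.
reviews = [
    Review("Outlet A", 75, True, weight=1.5),
    Review("Outlet B", 63, True),
    Review("Outlet C", 50, False),
    Review("Outlet D", 88, True),
]

print(tomatometer(reviews))                         # 75
print(weighted_average(reviews))                    # 70
print(metacritic_label(weighted_average(reviews)))  # generally favourable reviews
```

Three of the four invented reviews are fresh, so the percentage is 75, while the weighted mean of the converted grades is 313.5 / 4.5, which rounds to 70.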

Rotten Tomatoes’ mostly dualistic rotten/fresh model traces its history back to the thumbs up/thumbs down scale used by Siskel and Ebert in their television show, at a time when reviews were more commonly scored across a scale of four or five stars. Atchity acknowledges this antecedent and describes a 90 per cent fresh score as signifying that “everybody said, at the very least, ‘Yeah, check this out’, [which] doesn’t necessarily mean everybody’s raved about it” (qtd. in Bibbiani, 2015). The logic behind this approach, according to Siskel, was that this was the closest approximation to a normal conversation on a film among general audiences. In this context, the main point of interest regarding a new release was not “a speech on the director’s career” but “should I see this movie?” (qtd. in Ebert, 2008), again echoing the theme of being democratic and making film more accessible.

Critics of the fresh/rotten and thumbs-up/thumbs-down model include Ebert himself, who bemoaned the absence of a “true middle position”. He declared that although he thought “2.5 [out of four] was a perfectly acceptable rating for a film I rather liked in certain aspects”, he ultimately considered this to be a negative review. This runs counter to the Rotten Tomatoes system, which translates the 2.5 as a fresh review, reportedly forcing Ebert to change his ratings to accommodate the algorithm (Emerson, 2011).

The problem of conflicts over ratings and conversions doesn’t for the most part affect Metacritic, which allows for a “mixed” and “generally positive” terrain, using critics’ own scores to convert directly into its scale and allowing them to appeal should they disagree with a certain score (“Frequently Asked Questions”). In practice, this means that Rotten Tomatoes “lends itself to higher high scores and lower low scores” (Weinstein, 2012). However, some publications such as Sight & Sound and The Hollywood Reporter publish unscored reviews, which presents issues of interpretation. To name but two: how much weight should a critique of Zero Dark Thirty (2012)’s politics as a “pietà for the war on terror” be given if the review also praises the film as “technically awe-inspiring” (Westwell, 2012)? And how can the combination of Ex Machina (2015)’s “remarkably slick” mise-en-scène versus that of the script’s “muddled rush” third act be quantified (Dalton, 2015)?
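Weinstein’s point about “higher high scores and lower low scores” can be illustrated with a small Python sketch. Everything in it is an assumption made for the sake of the example: the two lists of grades are invented, a review is counted as positive when its converted grade is above 60, and a plain unweighted mean stands in for Metacritic’s undisclosed weighted and normalized average.

```python
def share_positive(scores, threshold=60):
    """Binary tally: percentage of grades above the (assumed) threshold."""
    return round(100 * sum(s > threshold for s in scores) / len(scores))


def mean_score(scores):
    """Plain average of the grades, standing in for a weighted Metascore."""
    return round(sum(scores) / len(scores))


# Invented grades on a 0-100 scale for two hypothetical films.
lukewarm_praise = [65, 70, 62, 68, 75]      # every critic mildly positive
mild_disappointment = [55, 50, 58, 45, 52]  # every critic mildly negative

for name, scores in [("lukewarm praise", lukewarm_praise),
                     ("mild disappointment", mild_disappointment)]:
    print(name, share_positive(scores), mean_score(scores))
# lukewarm praise 100 68
# mild disappointment 0 52
```

Under these assumptions the two films sit only 16 points apart on the averaged scale but at opposite extremes of the binary one, because a unanimous tally records agreement rather than enthusiasm.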

Aside from the methodological concerns, perhaps the most important question regarding review aggregators is their impact on a film’s box office performance and post-release success, and how it relates to the websites’ corporate ownership structures. Metacritic’s parent company CNET is part of the CBS Corporation media conglomerate, which owns CBS Films and television networks such as Showtime. Since 2011 Rotten Tomatoes has been owned in part by Warner Brothers, which as of 2019 owns 30% of its parent company Fandango, along with Comcast Universal, which owns a majority stake. In 2017, Rotten Tomatoes was heavily criticized due to suspicions that it had delayed showing a low aggregated score for Warner Brothers’ $300m blockbuster Justice League (2017). Ordinarily Rotten Tomatoes would start showing an aggregated score at the end of the studio’s review embargo on Tuesday evening, but it only began showing the score on Thursday morning just before opening night, raising concerns that this was calculated to safeguard opening weekend box office grosses (Zeitchik, 2017).

However, it is far from clear that the aggregated scores have a meaningful impact on box office grosses. There is little available research on the topic, and the debate surrounding review aggregators tends to focus mostly on blockbuster Hollywood films. For low- and mid-budget films, word of mouth and critics’ reviews on the festival circuit can be decisive for achieving distribution. Upon release, however, marketing campaigns for less high-profile films often amplify critical acclaim to attract audiences, making it hard to disentangle the effect of this advertising, or of word of mouth on social media and among friends, from the impact of review aggregators themselves. In the case of blockbusters, the average Metacritic scores for the highest grossing films have been steadily going down (Crockett, 2016). Low-scoring films such as Suicide Squad (2016) and Batman v. Superman (2016), both big-budget Warner Brothers movies, still did extremely well at the box office. This is because, by relying on pre-existing source material, an A-list cast and relentless marketing campaigns, major studios have attempted to make their tentpole releases, which constitute the bulk of their yearly revenue, as “critic-proof” as possible (Kiang, 2016).

Despite the shortcomings of both websites, for much of the general audience the two aggregators have become the de facto indicator of critical consensus on a given film, and sometimes the only contact they will have with film criticism before or after watching a film. When the name of a film is typed into Google, its Metacritic and Rotten Tomatoes scores will appear alongside its IMDb score (given by IMDb users), followed by short excerpts from reviews by key publications and ordinary filmgoers. A similar feature on YouTube displays the Tomatometer alone, and on IMDb the Metascore sits alongside the site’s own aggregator.

Of the two websites, the model used by Rotten Tomatoes is too crude and dualistic when set against Metacritic’s, which allows for more levels of critical reaction and at least makes some attempt at gauging nuance in a review beyond positive and negative. Despite Rotten Tomatoes’ democratic ethos, its use of a “top critics” score betrays an unspoken understanding that not all voices are equal in the world of film criticism. Any aggregator system that assigns scores to reviews, however representative the author might feel they are of his or her opinion, will never escape criticism for substituting a numeric score for articulated thoughts. A snapshot of a film’s critical reception at the moment of release does risk neglecting divisive but unique works of art and closing off more scholarly approaches to cinema (after all, Citizen Kane [1941] was initially poorly reviewed). Yet the usefulness of these tools must not be fully discounted. In the context of an ever-rising number of yearly film releases and the breadth of film criticism available, aggregators do allow for an estimation of what may contentiously be referred to as the critical consensus on a given film.

References

Atchity, Matt, “I Am the Editor in Chief at Rotten Tomatoes. AMA”. Reddit, 5 Sep. 2012. Web. 16 Feb. 2019.

Bibbiani, William, “Your Opinion Sucks: Matt Atchity dishes on Rotten Tomatoes”. Mandatory, 28 Mar. 2015. Web. 16 Feb. 2019.

Crockett, Zachary, “Big-budget films are getting worse — and we can prove it”. Vox, 4 Apr. 2016. Web. 16 Feb. 2019.

Dalton, Stephen, “‘Ex Machina’: Film Review”. The Hollywood Reporter, 16 Jan. 2015. Web. 16 Feb. 2019.

Ebert, Roger, “You give out too many stars”. Rogerebert.com, 14 Sept. 2008. Web. 16 Feb. 2019.

Emerson, Jim, “Misinterpreting the Tomatometer”. Rogerebert.com, 16 Jun. 2011. Web. 16 Feb. 2019.

“Frequently Asked Questions”. metacritic.com. Web. 18 Feb. 2019.

Kiang, Jessica, “Too Big To Fail: What ‘Batman v Superman’ Tells Us About Blockbuster Culture”. Indiewire, 28 Mar. 2016. Web. 16 Feb. 2019.

Kohn, Eric, “Rotten Tomatoes Debate: Critics Discuss Whether the Service Hurts or Helps Their Craft”. Indiewire, 27 Jun. 2017. Web. 16 Feb. 2019.

Lattanzio, Ryan, “A.O. Scott Lampoons Studios’ Treatment of Critics via Twitter”. Indiewire, 8 Jul. 2013. Web. 16 Feb. 2019.

Poniewozik, James, “Why Roger Ebert’s Thumb Mattered”. TIME, 5 Apr. 2013. Web. 16 Feb. 2019.

Weinstein, Joshua, “Movie review aggregators popular, but do they matter?”. Reuters, 17 Feb. 2012. Web. 16 Feb. 2019.

Westwell, Guy, “Zero Dark Thirty”. Sight and Sound, 23.2 (2012): 86-87. Print.

Zeitchik, Steven, “Rotten Tomatoes under fire for timing of ‘Justice League’ review”. Washington Post, 16 Nov. 2017. Web. 18 Feb. 2019.

Written by Ricardo Silva Pereira (2019); Queen Mary, University of London

This article may be used free of charge. Please obtain permission before redistributing. Selling without prior written consent is prohibited. In all cases this notice must remain intact.
